It’s the same as glxgears but for EGL and Wayland. It tests that OpenGL works.

Max-P

- 1 Post

- 506 Comments

6·7 days ago

The issue DNS solves is the same as the phone book. You could memorize everyone’s phone number/IP, but it’s a lot easier to memorize a name or even guess the name. Want the website for walmart? Walmart.com is a very good guess.

Behind the scenes the computer looks it up using DNS and it finds the IP and connects to it.
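To make that concrete, here's a minimal sketch of asking the operating system's resolver for the addresses behind a name. The helper name `lookup` is mine, and it goes through whatever resolver the system is configured with (hosts file, DNS, cache), not DNS specifically.

```python
import socket


def lookup(name):
    """Return the sorted, de-duplicated IP addresses the system resolver gives for a name."""
    # getaddrinfo returns one tuple per (family, socket type) combination,
    # so the same address usually shows up several times.
    infos = socket.getaddrinfo(name, None)
    # Each tuple ends with a sockaddr whose first element is the IP string.
    return sorted({info[4][0] for info in infos})
```

Calling `lookup("walmart.com")` on a connected machine should return one or more addresses, and which ones you get depends on where and when you ask.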

The way it started, people were maintaining and sharing hosts files. A new system would come online and people would take the IP and add it to their hosts file. It was quickly found that this really doesn’t scale well: you might want to talk to dozens of computers, and you’d have to find the IP for each one! So DNS was developed as a central directory service any computer can query to look things up, with a hierarchy to distribute it and all. And it worked really well, so well we still use it extensively today. The desire to delegate directory authority is how the TLD system was born. The hosts file didn’t use TLDs, just plain names, as far as I know.
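For a feel of what the pre-DNS approach amounts to, here's a rough sketch of a hosts-file-style lookup table. The format (an IP followed by one or more names, `#` for comments) matches the `/etc/hosts` convention still used today; the sample names and the `parse_hosts` helper are made up for illustration, and the addresses come from the range reserved for documentation.

```python
def parse_hosts(text):
    """Build a name -> IP table from hosts-file-style text."""
    table = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            # Keep the first entry seen for a name, ignoring case.
            table.setdefault(name.lower(), ip)
    return table


# Hypothetical entries, not real machines.
SAMPLE = """
# shared by hand between admins
192.0.2.10  alpha
192.0.2.11  beta beta-alias  # two names for one machine
"""
```

Every machine needs its own up-to-date copy of that table, which is exactly the part that stops scaling.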

3·7 days ago

There’s definitely been a surge in speculation on domain names. That’s part of the whole dotcom bubble thing. And it’s why I’m glad TLDs are still really hard to obtain, because otherwise they would all be taken.

Unfortunately there’s just no other good way to deal with it. If there’s a shared namespace, someone will speculate on the good names.

Different TLDs can help with that a lot by having their own requirements. .edu for example, you have to be a real school to get one. Most ccTLDs you have to be a citizen or have a company operating in the country to get it. If/when it becomes a problem, I expect to see a shift to new TLDs with stronger requirements to prove you’re serious about your plans for the domain.

It’s just a really hard problem: when millions of people are competing to get a decent globally recognized short name, you’re just bound to run out. I’m kind of impressed at how well it’s holding up overall despite the abuse. I feel like it’s still relatively easy to get a reasonable domain name, especially if you avoid the big TLDs like com/net/org/info. You can still get xyz for dirt cheap, and sometimes there are even free ones, like .tk and .ml were for a while. There are also several free short-ish ones. I used max-p.fr.nf for a while because it was free and still looks like a real domain, a lot like a .co.uk or something.

8·7 days ago

Because if they’re not owned, then how do you know who is who? How do we independently conclude that yup, `microsoft.com` goes to Microsoft, without some central authority managing who’s who?

It’s first come first served which is a bit biased towards early adopters, but I can’t think of a better system where you go to `google.com` and reliably end up at Google. If everyone had a different idea of where that should send you it would be a nightmare, we’d be back to passing IP addresses on post-it notes to your friends to make sure we end up on the same `youtube.com`. When you type an address you expect to end up on the site you asked, and nothing else. You don’t want to end up on Comcast YouTube because your ISP decided that’s where `youtube.com` goes, you expect and demand the real one, the same as everyone else.

And there’s still the massive server costs to run a dictionary for literally the entire Internet for all of that to work.

A lot of the times, when asking those kinds of questions, it’s useful to think about how would you implement it such that it would work. It usually answers the question.

10·7 days ago

In case you didn’t know, domain names form a tree. You have the root `.`, you have TLDs `com.`, and then usually the customer’s domain `google.com.`, then subdomains `www.google.com.`. Each level of dots typically hands over the rest of the lookup to another server. So in this example, the root servers tell you go ask .com at this IP, you go ask .com where Google is, and it tells you the IP of Google’s DNS server, then you query Google’s DNS server directly. Any subdomain under Google only involves Google, the public DNS infrastructure isn’t involved at that point, significantly reducing load. Your ISP only needs to resolve Google once, then it knows how to get `*.google.com` directly from Google.

You’re not just buying a name that by convention ends with a TLD. You’re buying a spot in that chain of names, the tree that is used to eventually go query your server and everything under it. The fee to get the domain contributes to the cost of running the TLD.
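As a rough illustration of that chain, this sketch lists the zones a resolver would walk through for a name, from the root down. It only does string handling and sends no queries; `delegation_chain` is a name I made up, and real delegations don't have to fall on every dot.

```python
def delegation_chain(name):
    """List the zones from the root down to the full name, one per label."""
    labels = [label for label in name.rstrip(".").split(".") if label]
    chain = ["."]  # every lookup conceptually starts at the root
    # Walk the labels right to left: com., then google.com., and so on.
    for i in range(len(labels) - 1, -1, -1):
        chain.append(".".join(labels[i:]) + ".")
    return chain
```

For `www.google.com.` this gives `.`, `com.`, `google.com.`, `www.google.com.`, which is the order of the hand-offs described above.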

18·7 days ago

Mostly because you need to be able to resolve the TLD. The root DNS servers need to know about every TLD and it would quickly be a nightmare if they had to store hundreds of thousands of records vs the handful of TLDs we have now. The root servers are hardcoded, they can’t easily be scaled or moved or anything. Their job is solely to tell you where .com is, .net is, etc. You’re supposed to query those once and then hold on to your cached reply for like 2+ days. Those servers have to serve the entire world, so you want as few queries to those as possible.
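The "query once, then hold on to the reply" part is just a cache with an expiry time. Here's a minimal sketch, assuming a 2-day lifetime to match the figure above; the `TtlCache` class and the server name in the usage note are made up, and real resolvers take the lifetime from the TTL on each record.

```python
import time


class TtlCache:
    """Remember answers until their time-to-live runs out."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock  # injectable so expiry can be tested without waiting
        self._entries = {}   # name -> (value, expires_at)

    def put(self, name, value, ttl_seconds):
        self._entries[name] = (value, self._clock() + ttl_seconds)

    def get(self, name):
        entry = self._entries.get(name)
        if entry is None:
            return None  # never cached: the caller has to go ask upstream
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[name]  # expired: forget it and ask again
            return None
        return value
```

With something like `cache.put("com.", "tld-server.example", 2 * 24 * 3600)`, every lookup under .com for the next two days can skip the root servers entirely.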

Hosting a TLD is a huge commitment and so requires a lot of capital and a proper legal company to contractually commit to its maintenance and compliance with regulations. Those get a ton of traffic, and users getting their own TLDs would shift the sum of all gTLD traffic to the root servers which would be way too much.

With the gTLDs and ccTLDs we have, at least there’s a decent amount of decentralization going, so .ca is managed by Canada for example, and only Canada has jurisdiction over that domain, just like only China can take away your .cn. If everyone got TLDs the namespace would be full already, with all the good names squatted and waiting to be sold for as much as possible, like already happens with the .com and .net TLDs.

There’s been attempts at a replacement but so far they’ve all been crypto scams and the dotcom bubble all over again speculating on the cool names to sell to the highest bidder.

That said, if you run your own DNS server and configure your devices to use it, you can use any domain you want. The problem is going to be getting the public Internet at large to recognize it as real.

WireGuard works great for that.

13·9 days ago

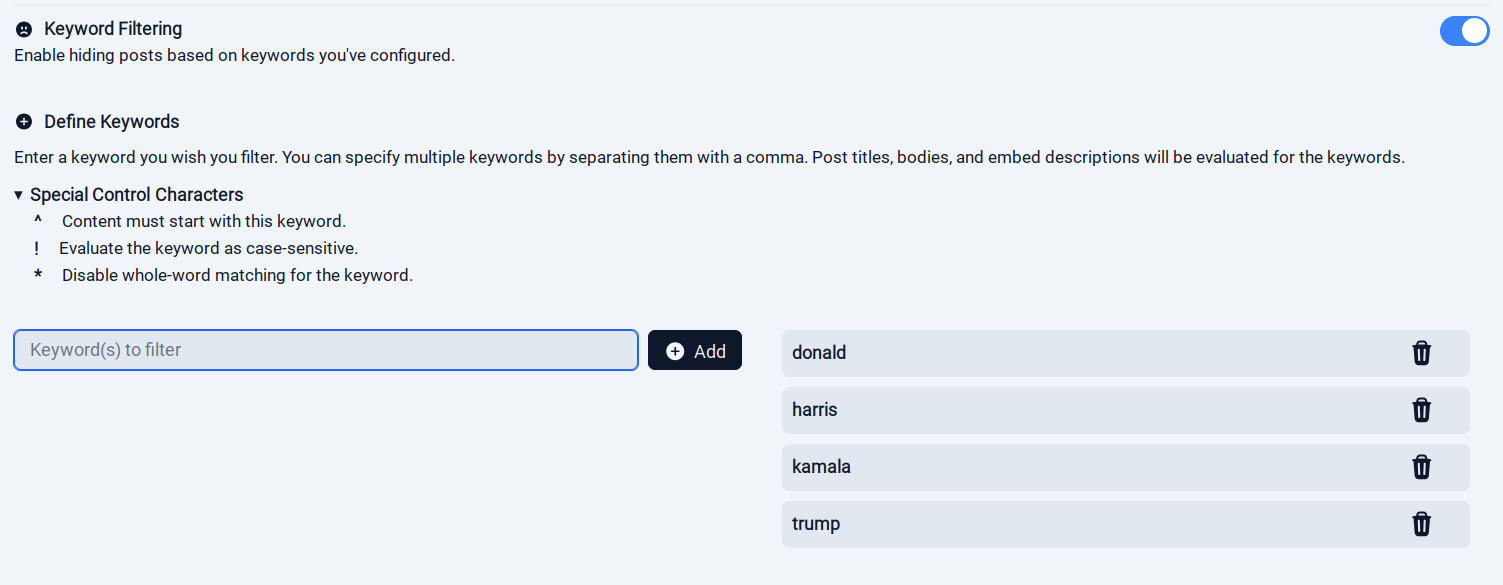

Not sure if Voyager exposes such a setting (probably?), but on Tesseract I’d do something like this:

691·9 days ago

Voyager for Lemmy, at least for me, pushes political content like crazy.

No content is being pushed to anyone, Lemmy’s algorithms are very simple. It’s just there’s a lot of it.

You can unsubscribe from or block the politics and news communities, especially worldnews, and it should get rid of a lot of it. I find the experience to be better when subscribing to the stuff you want rather than remove the stuff you don’t want.

And also do it now because it’s likely about to get a whole lot more difficult to access services soon.

Ah I see. I wasn’t using those as job suggestions but rather examples of jobs that are considered “loser” jobs and use those to drive the point that they’re important too, and there’s nothing wrong with ending up with those for your whole life. A job’s purpose is to give you money. Trying to comfort OP by removing the “loser” label and not focus as much on “well you could go flip burgers” because that just doesn’t help the emotional side of the situation.

I wish her to aspire to do a little more than those, but even assuming she’s as dumb as she claims to be, there’s still options, some granted less good than others, but just as important to society. I do think she’s got more potential than she thinks though, she reminds me a bit of my wife when I met her and now she’s competing with me as DevOps/SRE.

As another example: I’m autistic, I struggle with a lot of things. I make frequent use of DoorDash, I also hire cleaners every now and then to clean, and handymen to repair stuff in the house I just can’t deal with on my own. All of those jobs, people shit on continuously, and me too because I can’t manage some quite basic tasks. But using those services let me focus on what I’m good at, which is keeping thousands of computers happy. I make a fair bit of money which gets shared with all those that support me in being a productive member of society: cooks, waiters, delivery drivers, cleaners, trades. It’s good for the economy, it’s good for society. Those people deserve respect for their somewhat hidden contributions. Not having those people would ultimately make me fail, that would drag my employer with it, other companies wouldn’t have the software they need to operate.

Everyone who contributes to society is important and valuable, whether people recognize it or not.

Yeah that’s an intentionally extreme example. I’ve also seen garbage truck drivers being used in that context. The point is more that we need people on all tiers of jobs, even the literal shit ones. We need truck drivers, we need train drivers, we need people to pick up crops, we need people to take the trash out, we need people to maintain the sewers, we need people to empty out septic tanks. They’re critical infrastructure, and one shouldn’t feel bad because they ended up being a garbage truck driver. If you want a cozy repeated job and come home at 5 to your kids and family, that’s perfectly acceptable.

We put way too much emphasis on “success” and its connection to highly educated and high paying jobs.

IMO the fact that fastfood jobs are considered temporary bootstrap jobs where you’re expected to be exploited to hell is bullshit and an indication of the absolutely broken moral compass of the corporate world. We could do without fast food, but that doesn’t mean we should pay them minimum wage. Everyone deserves a livable wage no matter what they do.

I wouldn’t exclude lower IQ as that major of a problem. Sure maybe it kind of excludes you from being an engineer or a lawyer or a doctor and these kinds of jobs. But there’s plenty of low education jobs around, and there’s no shame in that. If everyone was engineers and lawyers we’d have major problems keeping shops and fastfood open. My dad didn’t finish school and raised me no problem, and lives fine. He might not be good at math or writing, but it’s plenty for woodworking and being a handyman.

As others have already pointed out, you’re articulate and sound smarter than a bunch of people I’ve seen on Lemmy. I mean hell, you found your way into Lemmy, a platform that’s still fairly niche and filled with nerds. You could have gone to Reddit but you came to the fediverse.

Everyone has their strengths and things they’re good at. Finding what you like to do is a good start. Some people inherently take artistic paths, and art has nothing to do with intelligence. What you need to do is figure out what you like to do that’s pleasant and satisfying for you to do, and get out of your head that you have to go to higher education.

Also worth noting, you mentioned ADHD. If you’re not diagnosed for it or treated for it, in itself that can significantly lower your IQ scores especially if not accounting for that. When I had my ADHD assessment, they spent time measuring exactly how much my cognitive performance declines under conditions harsh for ADHD. I swear I struggled to figure out how to take the bus after that because I was so fried, was very glad I was too lazy to take the car that day. They noted, initially being well rested I performed really well then my performance tanked the moment they started hammering the ADHD. It’s also important to understand IQ measures only one thing: intelligence. It doesn’t measure empathy, communication, art, or anything else. That might limit you for intellectual jobs, but you can still be great at people jobs. You could be HR, you could be sales, you could be support. Some of the best artists I know failed school hard.

Stop being jealous and ashamed. Those that shame you can go to hell, all they do is make you think you’re worthless and inferior to them. Find your own path.

2·10 days ago

Yeah it’ll depend on how good your coreboot implementation is. AFAIK it’s pretty good on Chromebooks because Google, whereas a corebooted ThinkPad might have some downsides to it.

The slowdowns I would attribute to likely bad power management, because ultimately the code runs on the CPU with no involvement with the BIOS unless you call into it, which should be very little.

Looking up the article seems to confirm:

The main reason it seems for the Dasharo firmware offering lower performance at times was the Core i5 12400 being tested never exceeded a maximum peak frequency of 4.0GHz while the proprietary BIOS successfully hit the 4.4GHz maximum turbo frequency of the i5-12400. Meanwhile the Dasharo firmware never led to the i5-12400 clocking down to 600MHz on all cores as a minimum frequency during idle but there was a ~974MHz.

I’d expect System76 laptops to have a smaller performance gap if any since it’s a first-party implementation and it’s in their interest for that stuff to work properly. But I don’t have coreboot computers so I can’t validate, that’s all assumptions.

That said for a 5% performance loss, I’d say it counts as viable. My games VM has a similar hit vs native. I’ve been gaming on Linux well before Proton and Steam and have taken much larger performance hits before just to avoid closing all my work to reboot for break time games.

16·12 days ago

Yes dual GPU. I set that up like 6 years ago, so its use changed over time. It used to be Windows but now it’s another Linux VM.

The reason I still use it is it serves as a second seat and is very convenient at that. The GPU’s output is connected to the TV, so the TV gets its own dedicated and independent OS. So my wife can use it when I’m not. When the VM isn’t running I use the card as a render offload, so games get the full power of the better card as well.

I also use it for toying with macOS and Windows because both of those are basically unusable without some form of 3D acceleration. For Windows I use Looking Glass which makes it feel pretty native performance. I don’t play games in it anymore but I still need to run Visual Studio to build the Windows exes for some projects.

This week I also used the second card to test out stuff on Bazzite because one of my friends finally made the switch and I need to be able to test things out in it as I have no fucking clue how uBlue works.

40·12 days ago

The BIOS does a lot less than you’d expect, it doesn’t really have an impact on gaming performance. For what it’s worth, I’ve been gaming in a VM for years, and it uses the TianoCore/OVMF/EDK2 firmware, and no issues. Once Linux is booted, it doesn’t really matter all that much. You’re not even allowed to use firmware services after the OS is booted, it’s only meant for bootloaders or simple applications. As long as all the hardware is initialized and configured properly it shouldn’t matter.

233·20 days ago

It’s nicknamed the autohell tools for a reason.

It’s neat but most of its functionality is completely useless to most people. The autotools are so old I think they even predate Linux itself, so they’re designed for portability between UNIXes of the time: they check the compiler’s capabilities and supported features and try to find paths. They also wildly predate package managers, so they were the official way to install things, and there was also a need to check for dependencies, find dependencies, and all that stuff. Nowadays you might as well just write a PKGBUILD if you want to install it, or a Dockerfile. There’s just no need to check for 99% of the stuff the autotools check. Everything they check for has probably been a standard compiler feature for at least the last decade, and the package manager can ensure you have the build dependencies present.

Ultimately you eventually end up generating a Makefile via M4 macros through that whole process, so the Makefiles that get generated look as good as any other generated Makefiles from the likes of CMake and Meson. So you might as well just go for your hand written Makefile, and use a better tool when it’s time to generate a Makefile.

(If only c++ build systems caught up to Golang lol)

At least it’s not node_modules

Yeah, and you’re pinging from server to client with no client connected. Ping from the client first to open the connection, or set keep alives on the client.

Your peer has no endpoint configured, so the client needs to connect to the server first for it to know where the client is. Try from the client, and it’ll work for a bit both ways.

You’ll want the persistent keepalive option on the client side to keep the tunnel alive.
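Here's roughly what that looks like, as a small sketch that renders the client-side `[Peer]` section. `PersistentKeepalive` is the real WireGuard option and 25 seconds is the commonly suggested interval; the `render_client_peer` helper and the key, endpoint, and subnet in the usage note are placeholders, not values from your setup.

```python
def render_client_peer(server_public_key, endpoint, allowed_ips, keepalive_seconds=25):
    """Render the [Peer] section for the client side of a WireGuard tunnel."""
    lines = [
        "[Peer]",
        f"PublicKey = {server_public_key}",
        f"Endpoint = {endpoint}",        # the client knows where the server is
        f"AllowedIPs = {allowed_ips}",
        # Send a packet this often so the server keeps learning where the client is.
        f"PersistentKeepalive = {keepalive_seconds}",
    ]
    return "\n".join(lines) + "\n"
```

For example, `render_client_peer("<server-public-key>", "vpn.example.com:51820", "10.0.0.0/24")` produces a section you could paste into the client config, after swapping in your own values.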

Same for KDE https://apps.kde.org/fr/kjournaldbrowser/