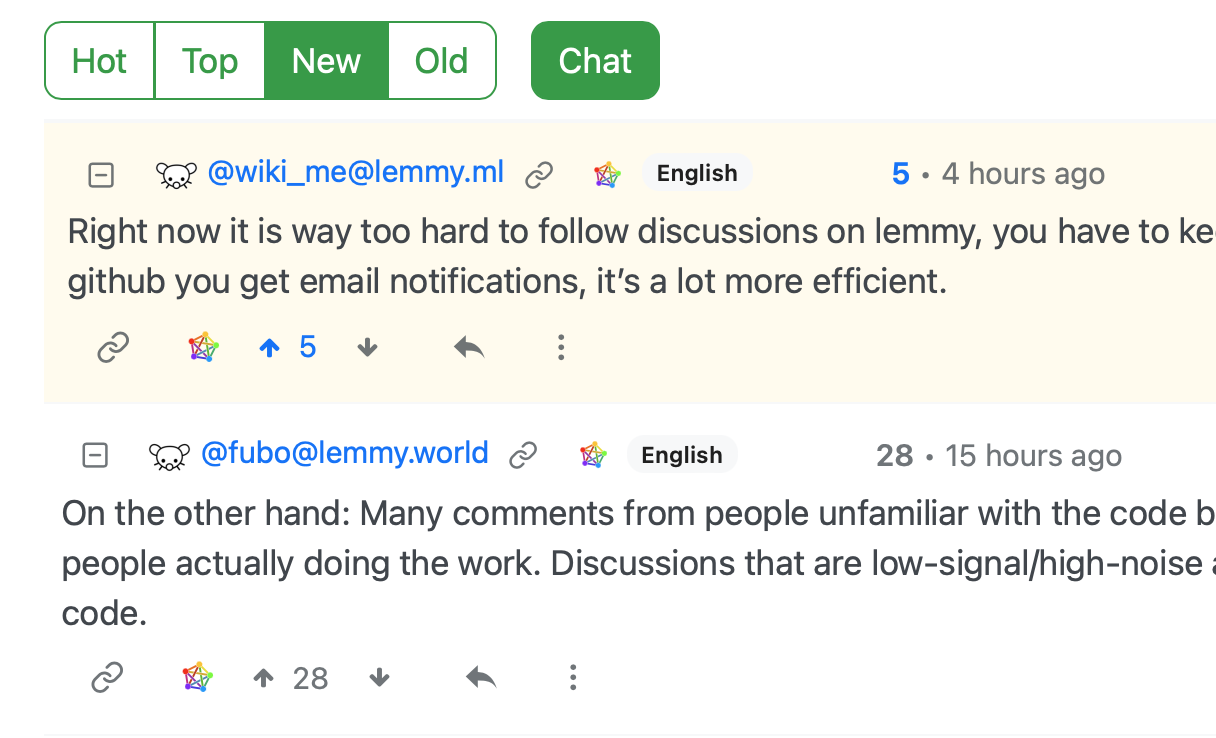

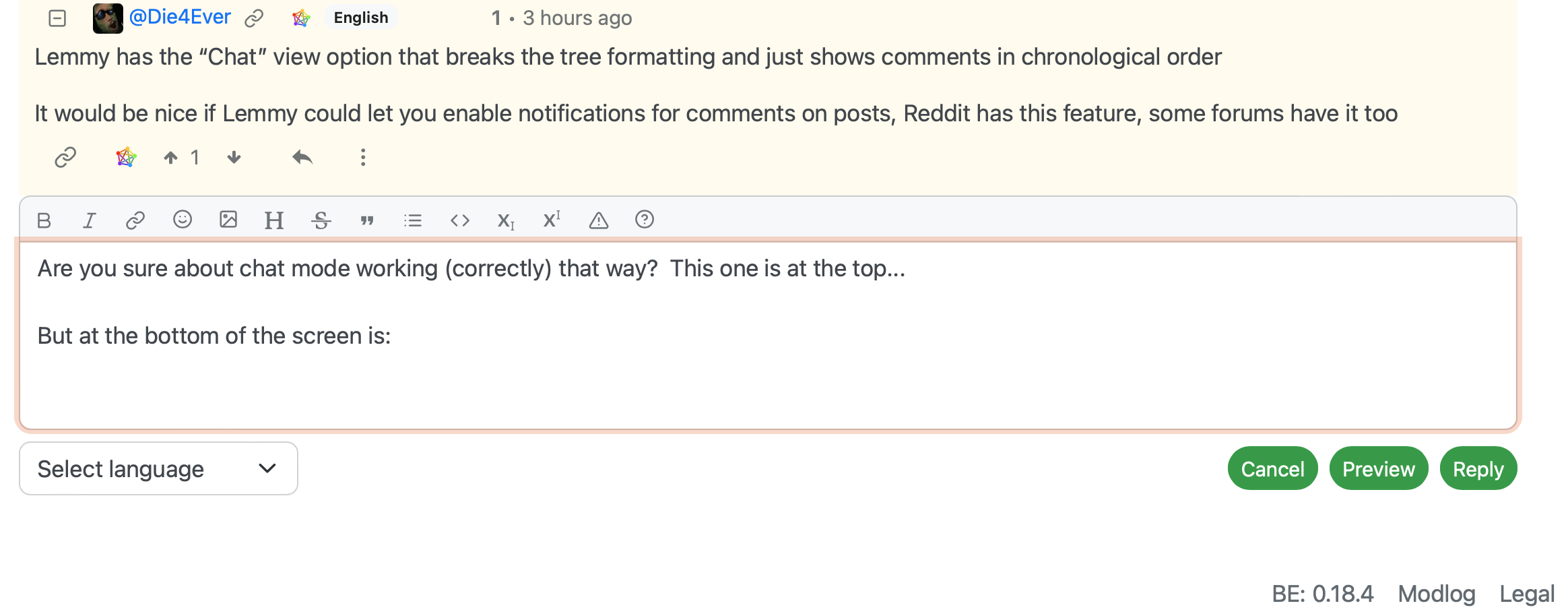

Are you sure about chat mode working (correctly) that way? This one is at the top…

But at the bottom of the screen is:

This doesn’t appear to be in chronological order.

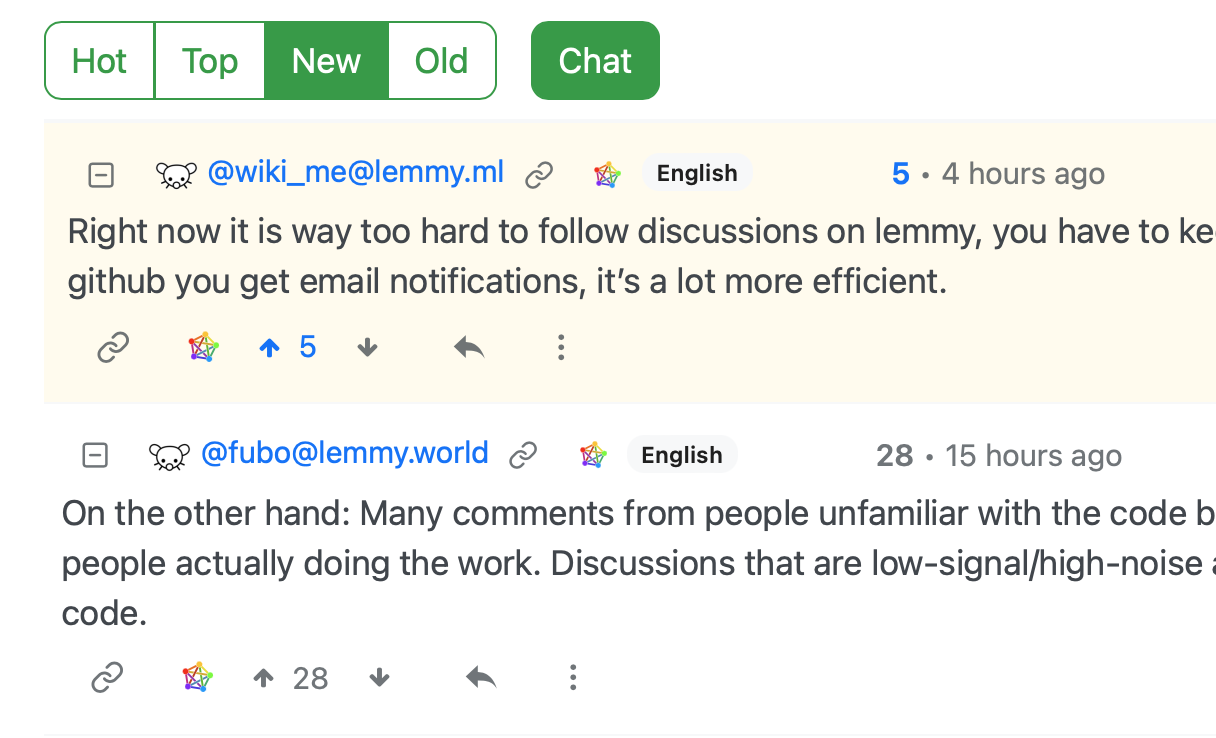

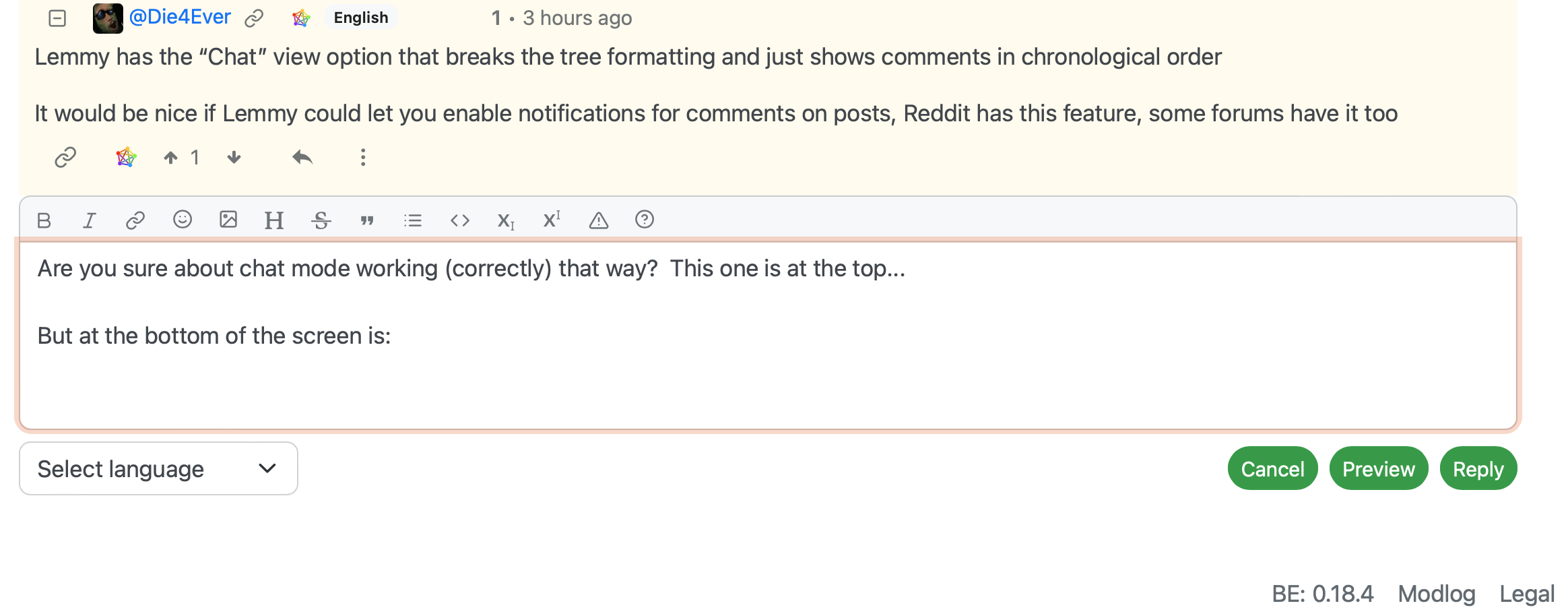

Are you sure about chat mode working (correctly) that way? This one is at the top…

But at the bottom of the screen is:

This doesn’t appear to be in chronological order.

No.

The nature of the checksums and perceptual hashing is kept in confidence between the National Center for Missing and Exploited Children (NCMEC) and the provider. If the “is this classified as CSAM?” service were available as an open source project, those attempting to circumvent the tool would be able to test it until the modifications were sufficient to get a false negative.
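For anyone unfamiliar with the term, here is a toy sketch of what a perceptual hash does. This is a generic "average hash", not the algorithm NCMEC or any provider uses (that is confidential, as noted above). The 4x4 pixel grid and the match threshold are made up for illustration.

```python
def average_hash(pixels):
    """Return a bit string: '1' where a pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if p > mean else "0" for p in flat)


def hamming(a, b):
    """Count the positions where two equal-length bit strings differ."""
    return sum(x != y for x, y in zip(a, b))


# A tiny made-up 4x4 grayscale "image" and a slightly brightened copy of it.
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [12, 22, 202, 212],
    [18, 28, 208, 218],
]
brightened = [[min(255, p + 5) for p in row] for row in original]

distance = hamming(average_hash(original), average_hash(brightened))
is_match = distance <= 2  # hypothetical threshold, chosen only for this example
```

Unlike a checksum, the hash of the brightened copy stays close to the original, so near-duplicates still match. That same property is why an openly testable matcher would be a problem.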

There are attempts to do “scan and delete”, but this may put server admins in even more legal jeopardy than not scanning, because server admins are required by law to report and preserve the images and log files associated with CSAM.

I’d strongly suggest that anyone hosting a Lemmy instance read https://www.eff.org/deeplinks/2022/12/user-generated-content-and-fediverse-legal-primer

The requirements for hosting providers are in 18 U.S.C. § 2258A: https://www.law.cornell.edu/uscode/text/18/2258A

(a) Duty To Report.—

(1) In general.—

(A) Duty.—In order to reduce the proliferation of online child sexual exploitation and to prevent the online sexual exploitation of children, a provider—

(i) shall, as soon as reasonably possible after obtaining actual knowledge of any facts or circumstances described in paragraph (2)(A), take the actions described in subparagraph (B); and

(ii) may, after obtaining actual knowledge of any facts or circumstances described in paragraph (2)(B), take the actions described in subparagraph (B).

(B) Actions described.—The actions described in this subparagraph are—

(i) providing to the CyberTipline of NCMEC, or any successor to the CyberTipline operated by NCMEC, the mailing address, telephone number, facsimile number, electronic mailing address of, and individual point of contact for, such provider; and

(ii) making a report of such facts or circumstances to the CyberTipline, or any successor to the CyberTipline operated by NCMEC.

…

(e) Failure To Report.—A provider that knowingly and willfully fails to make a report required under subsection (a)(1) shall be fined—

(1) in the case of an initial knowing and willful failure to make a report, not more than $150,000; and

(2) in the case of any second or subsequent knowing and willful failure to make a report, not more than $300,000.

Child Sexual Abuse Material.

Here’s a safe for work blog post by Cloudflare for how to set up a scanner: https://developers.cloudflare.com/cache/reference/csam-scanning/ and a similar tool from Google https://protectingchildren.google

Reddit uses a CSAM scanning tool to identify and block the content before it hits the site.

https://protectingchildren.google/#introduction is the one Reddit uses.

https://blog.cloudflare.com/the-csam-scanning-tool/ is another such tool.

What happens if the posts were from an instance that was later defederated from the current instance?

Or was running an old (and now incompatible) version of the software?

If you have whitelisted *.mobile.att.net you’ve whitelisted a significant portion of the mobile devices in the US with no ability to say “someone in Chicago is posting problematic content”.

You’ve whitelisted 4.6 million IPv4 addresses and 7.35 × 10^28 IPv6 addresses.
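Totals like these come from adding up the sizes of the address blocks behind a wildcard entry. A minimal sketch of that arithmetic using Python's standard `ipaddress` module follows. The two blocks are documentation ranges standing in as placeholders, not the carrier's real allocations.

```python
import ipaddress

# Placeholder blocks (reserved documentation ranges), not real carrier allocations.
blocks = ["198.51.100.0/24", "2001:db8::/32"]

# Map each block to the number of addresses it contains.
counts = {cidr: ipaddress.ip_network(cidr).num_addresses for cidr in blocks}

for cidr, count in counts.items():
    print(f"{cidr}: {count:.3g} addresses")
```

A single IPv6 /32 already holds 2^96 addresses, which is how the IPv6 figure gets so large so quickly.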

Why have a whitelist at all then?

Would you be able to post an image if neither *.res.provider.com nor *.mobile.att.net were whitelisted, and adding 10-11-23-45.res.provider.com (and whatever it will be tomorrow) to the whitelist each time your address changed was considered too onerous?

If you’re whitelisting *.res.provider.com and *.mobile.att.net, the whitelist is rather meaningless because you’ve whitelisted almost everything.
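To make the wildcard problem concrete, here is a rough sketch of how such a whitelist check might behave. The `is_whitelisted` helper and the sample hostnames are hypothetical. Only the two patterns come from this discussion.

```python
from fnmatch import fnmatchcase

# The two wildcard entries discussed here.
WHITELIST = ["*.res.provider.com", "*.mobile.att.net"]


def is_whitelisted(hostname, patterns=WHITELIST):
    """Return True if the reverse-DNS hostname matches any wildcard pattern."""
    name = hostname.lower()
    return any(fnmatchcase(name, pattern) for pattern in patterns)


# Made-up hostnames for illustration.
hosts = [
    "10-11-23-45.res.provider.com",
    "10-99-0-1.res.provider.com",
    "some-phone.mobile.att.net",
    "mail.example.org",
]
results = {host: is_whitelisted(host) for host in hosts}
```

Every residential and mobile name passes, whatever address is behind it, so the check only excludes hosts that were never likely to upload in the first place.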

If you are not going to whitelist those, do you have any systems available to you (because I don’t) that would pass a theoretical whitelist that you set up?

Do you have a good and reasonable reverse DNS entry for the device you’re writing this from?

FWIW, my home network comes NAT’ed out as {ip-addr}.res.provider.com.

Under your approach, I wouldn’t have any system that I’d be able to upload a photo from.

Try turning wifi off on your phone, getting the IP address, looking up the DNS entry for it, and considering whether you want to whitelist that. Then do this again tomorrow and check whether it has a different value.
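If you want to script that lookup, a minimal sketch with the standard library might look like this. The `reverse_name` helper is my own naming. The `resolver` parameter exists only so the function can be exercised without a network connection.

```python
import socket


def reverse_name(ip, resolver=socket.gethostbyaddr):
    """Return the reverse-DNS hostname for ip, or None if there is no entry."""
    try:
        hostname, _aliases, _addresses = resolver(ip)
        return hostname
    except (socket.herror, socket.gaierror):
        # No PTR record, or the lookup itself failed.
        return None
```

Call `reverse_name("...")` with your phone's current public address today and again tomorrow, and compare the two results.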

Once you get to the point of “whitelist everything in *.mobile.att.net” it becomes pointless to maintain that as a whitelist.

Likewise *.dhcp.dorm.college.edu is not useful to whitelist.

The devs (nutomic and dessalines) and the instances that they are involved with hosting and upkeep (lemmy.ml and lemmygrad.ml).

The amongi hidden everywhere along with blue corner are my two favorite parts of Reddit’s Place.

Google Groups is one interface to it… and likely the largest archive of Usenet (via Deja News).

You can find Usenet in other places, such as https://www.eternal-september.org, by getting a news reader.

While the name may be the same, different articles get posted to each… just that often the same article gets posted to all.

Furthermore, discussions within each /c/ involve a different set of people.

Someone posted a dumb meme to a /c that you subscribe to. It keeps getting comments and votes. If you go to https://lemmy.world/?dataType=Comment&listingType=Subscribed&page=1&sort=New (I think that’s the right link for you), 2/3 of the comments on the page are to that post you just want to ignore and be done with.

How do you get that post and its comments out of your feed (active posts and new comments) without blocking the user who created the post?

It depends on how… thematic local is.

On programming.dev, local is almost entirely things that are interesting to me. Some I’m less interested about (new version of emacs released), but everything else is stuff I’d possibly read.

If I was more into Star Trek, then startrek.website would be the place to be as everything in local there is Star Trek related.

When you get to a general instance, local is less cohesive, and it is more people who picked *a* server rather than people who picked *that* server.

This also means that discovery of new content that is likely of interest is more challenging.

Two of the primary concerns for picking a server are ease of content discovery and confidence in server administration.

Most people aren’t interested in self hosting. It’s a chore to do patch updates. It’s a financial risk to have a cloud instance of some software running that could get hit with unexpected ingress, egress, or CPU charges… and you still have to deal with patch updates.

Self hosting Lemmy is as practical as self hosting email for most people.

The tools that Usenet had to maintain its culture were insufficient compared to the spam and the arrival of the rest of the net (today is the 10925th day of September 1993). Moderation was based on barrier to entry (see also the moderation tooling of alt.sysadmin.recovery) or limited federation (bofh restricted hierarchy).
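For anyone who hasn't met the Eternal September joke: the day count just keeps running from 1 September 1993. A small sketch of the conversion, with function names of my own choosing:

```python
from datetime import date, timedelta

# Day 1 is 1 September 1993, so the count starts from the day before.
DAY_ZERO = date(1993, 8, 31)


def eternal_september_to_date(day_of_september):
    """Convert 'the Nth day of September 1993' to a calendar date."""
    return DAY_ZERO + timedelta(days=day_of_september)


def date_to_eternal_september(d):
    """Convert a calendar date to its day number in September 1993."""
    return (d - DAY_ZERO).days


written_on = eternal_september_to_date(10925)
print(written_on.isoformat())
```

Under this convention, day 10925 works out to 30 July 2023.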

Combine this with the… reduction of, let’s call it ‘deep computer literacy’ among the techies - moving away from the command line and to web browsers and GUIs. This allowed people to get much of the content of the emerging web while drying up the flow of people arriving on Usenet.

While MySpace and GeoCities allowed the regular person to establish a web presence, these also were centralized systems. The web as a “stand up and self host” system is far beyond the technical literacy level of most people… and frankly, those who do know how to do it don’t, because keeping your web server up to date with the latest security patches or dealing with someone who is able to do an RCE on your AWS instance and run up your credit card bill is decidedly “not fun.”

And so, instead of running individual forums, you’ve got Reddit. It means that you don’t have to have deep knowledge of system administration or, even if you do, spend your days patching servers in order to host and interact with people on the internet.

So, today…

New software needs to be turnkey and secure by default. Without this, instability of smaller instances will result in single large instances being the default. Consider WordPress… and you’ve got a few large servers that run for the regular person and do automated software updates and patching because I’ve got no business anymore running a PHP application somewhere without spending time doing regular patching. When (not if) Lemmy has an RCE security issue (and not just the “can inject scripts into places” level of problems but rather underlying machine compromised) there will be a “who is staying up to date with the latest patches for Lemmy and the underlying OS?” day of reckoning.

Communities (not /c/ but people) need to be able to protect the culture that is established through sufficient moderation tooling. The moderation tooling on Reddit is ok and supplemented by the reddit admins being able to take deeper actions against the more egregious problem users. That level of moderation tooling isn’t yet present for the ownership level moderation of a /c/ nor at the user level being able to remove themselves from interactions with other individuals.

Culture needs to be defined as something that is rather than something that is not. This touches on A Group is its Own Worst Enemy ( https://gwern.net/doc/technology/2005-shirky-agroupisitsownworstenemy.pdf ) which I highly recommend. Pay attention to Three Things to Accept and Four Things to Design For. Having a culture of “this is not reddit, but everything we are doing is a clone of reddit” is ultimately self defeating as Conway’s Law works both ways and you’ll get reddit again… with all the problems of federation added in (the moderation one being important).

On that culture point, given federation it is even more important to establish a positive culture (though toxic positivity has its own problems). The alternative is a culture of malcontents swearing because they can, with no moderation to say no and no equivalent of elder statesmen to establish and maintain a tone (tangent: very lightly moderated chat on a game I play has a distinctly different tone depending on whether the ‘elder statesmen’ of that particular section of the game are present and chatting… just being there and being reasonable and polite has the effect of discouraging trolls - it’s no fun to troll people who won’t get mad at you, and people seek to be as good as the elder statesmen of the channel).

So, as long as Lemmy is copying Reddit (and Mastodon is copying Twitter - though they’re doing a better job of not copying it now), moderation isn’t solved, and the core group (read A Group) isn’t sufficiently empowered to set the tone, people will leave. Without a sufficiently large user base to engage with (and the ability to discover other places as appropriate if one /c/ isn’t to one’s liking), blocking users is less palatable, and when you’re seeing a larger percentage of messages that you’d rather not interact with… you’d leave. If you sat down at the bar and the guy next to you is swearing every other word because he can and the bartender won’t throw him out - you leave and avoid going back to that bar. The same is true of social media. Mastodon has the advantage that you’re interacting with individuals rather than communities.

On reddit, on subs I moderate (yes, I’m still there), I’ve got auto mod set up to filter all vulgarity. I approve nearly all of it, but it has also let me catch problems that are getting heated in word choice… and I can say “nope”, delete the comments (all the way down to the root) that are setting the wrong tone for the sub. And I’ve only had to do that twice in the past year.
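The idea of that setup, sketched in Python rather than as an actual AutoModerator rule: anything containing a flagged word is held for a human to look at instead of being removed outright. The word list and function name are placeholders, not my real configuration.

```python
import re

# Placeholder word list; a real one would be much longer.
FLAGGED_WORDS = {"darn", "heck"}


def needs_review(comment_text, flagged=FLAGGED_WORDS):
    """Return True if the comment contains a flagged word as a whole word."""
    words = re.findall(r"[a-z']+", comment_text.lower())
    return any(word in flagged for word in words)


comments = ["Well, heck.", "Checkmate in three.", "Nice write-up!"]
held_for_review = [c for c in comments if needs_review(c)]
```

Matching whole words keeps something like "Checkmate" out of the queue. Everything that is held still gets a human decision, which is the point.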

So… there’s my big point. The rate of new people joining has to be equal to or greater than the rate of people leaving for cultural or technical reasons. Technical reasons are fixed by fixing the software. Cultural ones are fixed by providing the tools for moderation. And if a given community starts causing evaporation of people because local or all on an instance becomes something that you wouldn’t enjoy seeing, the admins need to be sufficiently empowered to boot it. The ideal of “anything as long as it isn’t illegal - we don’t censor anything” often results in a site culture that isn’t enjoyable to be part of. A ‘dangerous’ part of the fediverse is that that culture can spread to other instances much more easily.

… And that’s probably enough rambling now. Make sure you read A Group is its Own Worst Enemy though. While it was something from nearly two decades ago - the things that it talks of are timeless and should not be forgotten when designing social software.

I’m not going to say “no”, but NSFW filtering is done by a user supplied flag on an item.

There is work being done to add an auto mod… https://github.com/LemmyNet/lemmy/issues/3281 but that’s different from the cancel bot approach that Usenet uses.

Not saying it is impossible, just that the structure seems to be trying to replicate Reddit’s functionality (which isn’t federated) rather than Usenet’s functionality (which is federated)… and that trying to replicate the solution that works for Reddit may work at the individual sub level but wouldn’t work at the network level (compare: when spammers are identified on reddit their posts are removed across the entire system).

The Usenet cancel system is federated spam blocking (and according to spammers of old, Lumber Cartel censorship).

If the appearance of some social media is “there is reasonable discussion but as soon as something shows up that’s awful, discussion stops there, but it remains up for all to see” - that’s going to have difficulty bringing in new people.

Instance blocking but still giving the appearance of condoning the content is going to lead to what appears to be toxic spaces to everyone who hasn’t taken the time to carefully cultivate their block lists.

Ignoring and personally blocking users and instances means that admins and moderators won’t have as good a view of the health of the communities when everything ends with a troll comment that no one but new users sees.