Damn now I have to boycott an entire video connector.

DisplayPort is superior anyway.

All my homies use DisplayPort

If TVs had it, that would be nice. I use a PC attached to my TV and don’t have the option.

There was a Panasonic (I think it was them) that had a DisplayPort connection, but that didn’t last.

I suspect HDMI threatened to cut their licence if they kept putting DP on the TVs.

The LG OLED screens from the C1 and up all have DisplayPort.

Currently using DisplayPort with my PC on an LG C2.

It doesn’t according to LG’s product page at least.

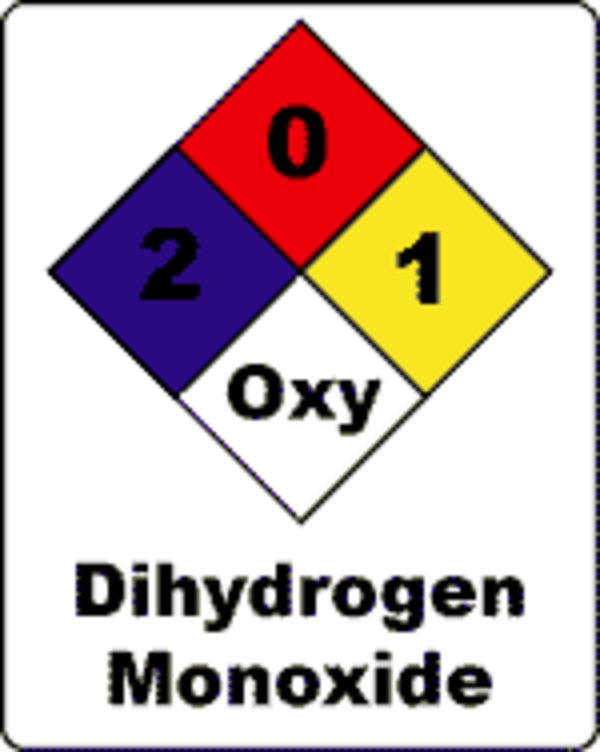

Also, wtf is this spec:

Yes

Um. You got a picture of that plugged into the tv?

I’ve never seen that on the LGs I’ve seen, and I’m an AV technician.

I’ve been out of the field for a year or so but I had never seen DP on residential stuff either.

Broadcast and stuff like that, sure. But not on resi

As someone who owns an LG C1, not a single DP in sight.

I also have a C2 that I use with a PC, and it does not have a single DisplayPort.

TVs are not very good displays for computers. You have to go through a bunch of settings to turn off as much image processing as possible. Even then, the latency will still be higher than a gaming monitor. You have to disable overscan, which always seems to be on by default even though it’s only useful for CRTs and makes absolutely no sense for a digital display with digital signals. Also, I’ve never had a single TV that will actually go into standby when commanded. It will just stay on with a no signal message when the computer turns off the output.

Mine doesn’t have any of those problems. The only thing I had to do was ensure the input selection was set to PC.

But then I’m using it 90% to stream stuff that is web only and some occasional gaming with the bonus of being able to fill out forms, Google and do light note taking without getting a different device. My alternatives would be needing a laptop or tablet along with some streaming device.

Yeah, most TVs I’ve seen either have a PC setting or have a game mode that just turns everything off.

“Monitors” are smaller.

And the minimum cost of entry to anything reasonably sized is double to triple. Changing some settings is well worth it.

Why?

I’ve never understood the difference, other than I get the vague sense DisplayPort is associated with Apple.

DisplayPort is designed to give your GPU a fast connection to your display. HDMI is designed to give the copyright holder of the video you are watching a DRM protected connection to your display to make piracy harder.

This is why DisplayPort is better for the consumer but HDMI is more popular because the device manufacturers are really in charge of what you get.

DP has HDCP too. I get what you’re saying, but there was more profiteering involved even than what you describe.

> HDMI is designed to give the copyright holder of the video you are watching a DRM protected connection

Care to explain how one feature added years in defines what the entire thing is “designed for”? HDMI had nothing related to DRM for several years.

Aside from DisplayPort having more bandwidth, as someone else pointed out, HDMI is consumer video garbage. DisplayPort was designed for use as a computer display. Also, HDMI Forum is a cartel that charges ridiculous licensing fees for their proprietary interface. VESA is a standards body and their licensing costs are much more reasonable.

HDMI 1.4 has less than half the bitrate of the oldest DisplayPort standard. And regarding the newer HDMI standard: read the article. The forum are being jerks…

The only disadvantage of DisplayPort that I can think of is that it’s harder to find capture devices (or at least Linux-compatible ones) that have DisplayPort. Generally no one cares about that stuff though. I use capture cards because I sometimes do stuff involving other computers and it’s 1000 times more convenient to have a VLC window floating around with the display output.

No one seems to have this use case besides me. I’m just glad that there exist capture cards fast enough to do playback in real time and not with a 5-second delay. I don’t stream.

Anyone doing anything with video game consoles has a capture card.

People using other computers usually use RDP, SSH, or a KVM system that also allows control.

The reason it’s harder is that devices with DisplayPort can basically record natively without a capture card. Other devices where capture would be common, like consoles, are designed to be plugged into a TV, and those in the TV business and console business are either in, or fold into, the HDMI consortium’s standards.

This mindset will never change until basically most TV manufacturers put DisplayPort on TVs.

Me neither. I play at 1440p/120Hz. Both cables can manage that resolution and frame rate.

The only difference I get is when I use DisplayPort and leave my computer alone for 20 mins, the GPU goes to sleep and the monitor won’t display anything although I hear background apps/games running.

Without changing any settings, using an HDMI cable solved that.

HDMI it is.

It starts to matter more when you do more exotic connections. DisplayPort is friendlier for merging into another form factor, and between the two, it’s the only one capable of tech like daisy-chaining monitors.

HDMI also requires a licensing fee to use, which technically adds a cost for the end user.

Even if HDMI manages the same framerate it will still have a higher latency.

In a multi-monitor setup, when a screen connected to DisplayPort goes to sleep, my computer treats it like that screen was disconnected, meaning all my open applications get shoved from one screen to another. I’ve also used HDMI to avoid that.

On the other hand, when I turn off my second monitor (on HDMI), all my apps stay on that screen, meaning I have to manually move them over to my main monitor where I can actually see them.

And if my DisplayPort monitor is off and everything’s on my second monitor, when I turn the main one back on all the windows go back to where they used to be (at least on Plasma Wayland).

That’s interesting, though not my experience. If a monitor is turned off then the PC picks up that it has disconnected.

The scenario I described occurs when the OS (Windows) sleeps the screen after inactivity. It could be a function of the laptop, of the monitor, or of the cables. In my setup, using HDMI instead of DisplayPort solves it.

Ugh, so THAT’S why it happens? It’s quite irritating, and only rebooting seems to fix it.

Why?

Because the cartel members want their super high res content only available with Genuine DRM Bullshit™. The gambit won’t work, of course, but they’re gonna try like hell.

4k @ 120, but 5k @ 240? How does that work?

They probably confused it; 4k 240 and 5k 120 are in about the same ballpark according to the handy dandy chart.
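For anyone who wants to sanity-check the "same ballpark" bit without the chart, here is a rough sketch of the arithmetic. Assumptions on my part: "4k" means 3840x2160, "5k" means 5120x2880, 24 bits per pixel, no compression, and blanking intervals are ignored, so real link requirements will come out somewhat higher. The function name `raw_gbps` is just made up for this example.

```python
def raw_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Uncompressed active-pixel data rate in Gbit/s (blanking ignored)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


# Assumed resolutions: 4k = 3840x2160, 5k = 5120x2880.
modes = {
    "4k @ 120": (3840, 2160, 120),
    "4k @ 240": (3840, 2160, 240),
    "5k @ 120": (5120, 2880, 120),
    "5k @ 240": (5120, 2880, 240),
}

for name, (w, h, hz) in modes.items():
    print(f"{name}: {raw_gbps(w, h, hz):.1f} Gbit/s")
```

Under those assumptions 4k @ 240 comes out around 47.8 Gbit/s and 5k @ 120 around 42.5 Gbit/s, so they really are close, while 5k @ 240 would need roughly 85 Gbit/s, which is a very different class of link.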

Fuck HDMI!

tomorrow my turn to post about this