Context: Jest is a JavaScript testing library, and mocking is something you do in tests so you don’t have to use production services. The AI understood both terms in a non-programming context.

Man, it really is like an extremely dense but dedicated intern. Does not question for a moment why it’s supposed to make fun of an interval, but delivers a complete essay.

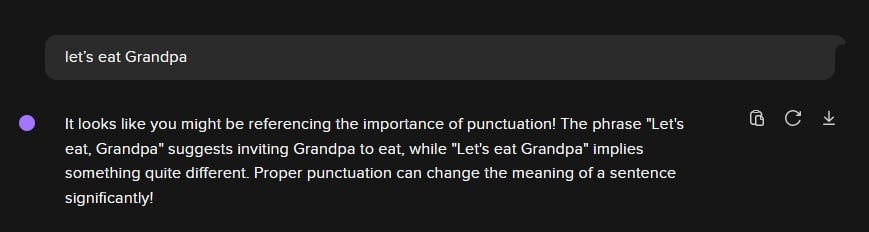

Just make sure to never say “let’s eat Grandpa” around an AI or it’ll have half the leg chomped down before you can clarify that a comma is missing.

Yeah, I didn’t think about that, but if someone said to an AI-powered robot “Hey, can you shred my reports?” as they leave work, they could easily come back in the morning to it tearing their junior staff into strips like “Morning boss, almost done”.

“How do I help my uncle Jack off a horse?”

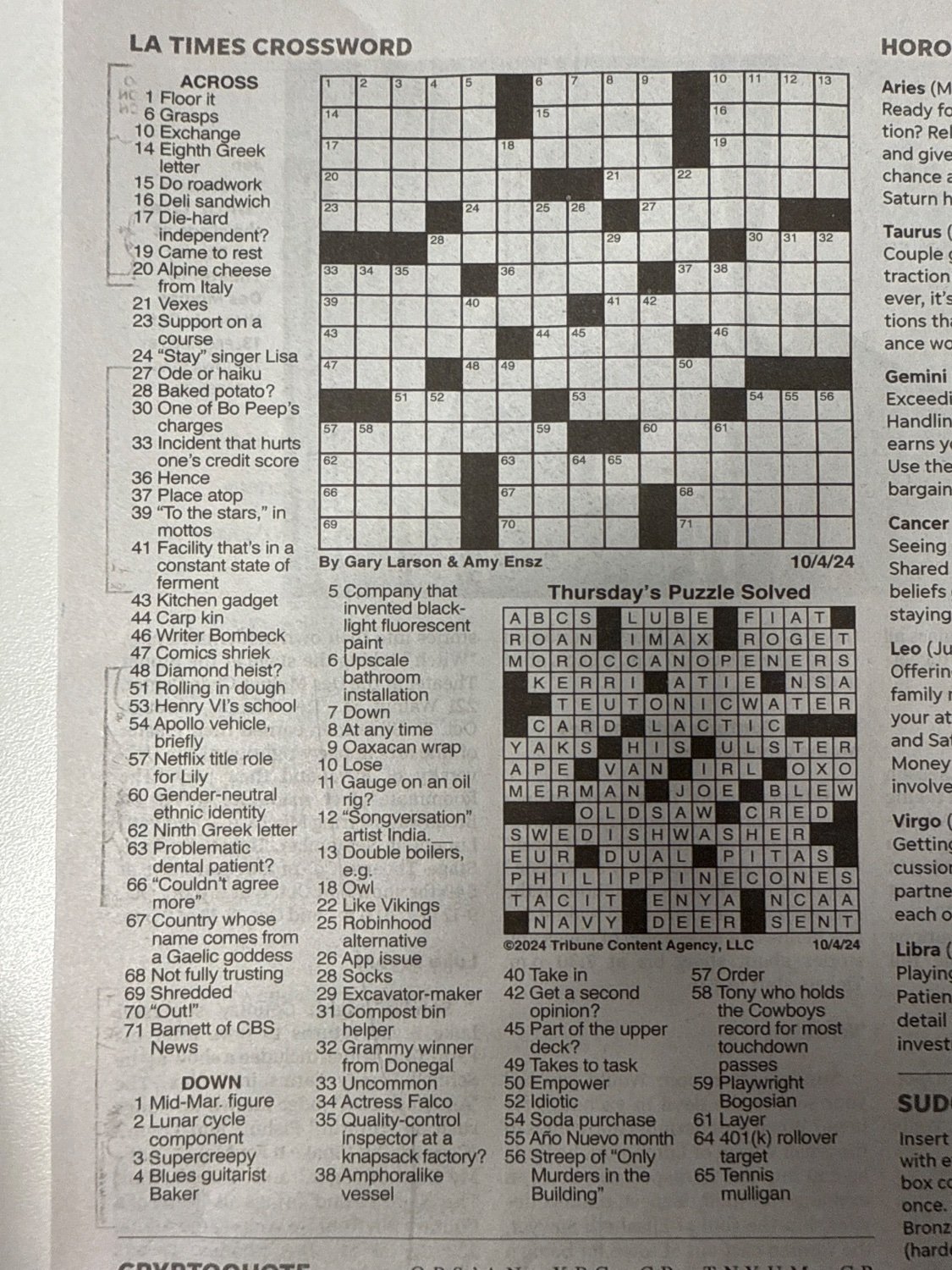

Also never ask it to solve a picture of a crossword.

how’s that?

Not great, honestly.

Yeah, this is the problem with frankensteining two systems together. Giving an LLM a prompt, and giving it a module that can interpret images for it, leads to this.

The image parser goes “a crossword, with the following hints”, when what the AI needs to do the job is an actual understanding of the grid. If one singular system understood both images and text, it could hypothetically understand the task well enough to fetch the information it needed from the image. But LLMs aren’t really an approach to any true “intelligence”, so they’ll forever be unable to do that as one piece.

Well tbf this isn’t what ChatGPT is designed for. It can interpret images and give information/advice/whatever, but not solve crossword puzzles entirely. 🤔

There’s a difference between helping to solve puzzles and actually solving them.

You have to be more specific:

I later did ask it to just be helpful, specifically requesting it give me some possible words that fit for the 5 letter possibility for #1. It repeated “floor it” lol.

via https://duckduckgo.com/?q=DuckDuckGo+AI+Chat&ia=chat&duckai=1 with GPT-4o mini

As much as I’m still skeptical about “AI taking all of our jobs” anytime soon, interactions like these still blow my mind…

It already has taken tons of graphic jobs. Also look up Graphite by IBM; it’s advertising to companies to hire them to do their code, which would be taking code jobs. I kind of think people don’t understand that these corporations are going to take it as far as it can be taken. If half the populace loses their jobs, they just don’t care. I really don’t get who they think is going to buy all of these products and services if no one has jobs, but their following quarter might be better.

That first one reminds me of a part of HHGTTG where I think Ford starts counting in front of a computer to intimidate it, because it’s like walking up to a human and chanting “blood, blood, blood”.

For those as confused as I: HHGTTG = The Hitchhiker’s Guide to the Galaxy

You sound like a hoopy frood that knows where his towel is

I’ll take that as the compliment it obviously is

How I Hit your Grandma Today, Thank God

To be pedantic, Ford’s threat is to “rearrange [the computer’s] memory banks with an axe”

The countdown is until he starts doing it.

Why do I read it out in Codsworth’s voice from Fallout 4?

ChatGPT has gotten scary good with both entirely misunderstanding me in almost the exact way my ND ass does to NTs AND with how well it responds to “no, silly, and please remember this in future”

I don’t use it super often, only every 6mo or so, and it’s gotten crazy good at remembering all my little nuances when I have it do shit for me (nothing like research, mostly “restructure data in format a to format b pls”)

No. 5 was a fun mental image

callback executed

💃

For all AI’s faults, this is a bit funny

Quite a comical detour.

The point when the AI hallucinations become useful is the point where I raise my eyebrows. This is not one of those.

In this case though, it’s not a hallucination, there’s nothing false in that response, it just completely misinterpreted what the user was asking.

LLM got jokes! This had me in tears. Best AI response I’ve ever read hands down.

I would have interpreted this the same way as the AI did FWIW. Then again, I don’t do frontend stuff, and I run when I see TypeScript in my hobby projects because it’s such a pain.